Deeper Dive

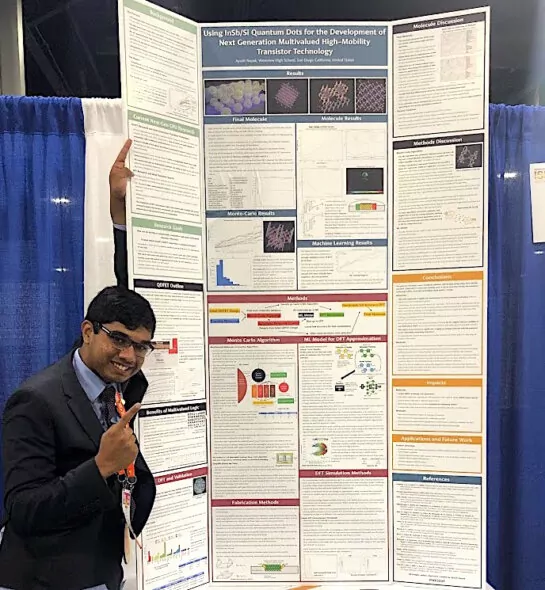

In my project I designed a new type of CPU transistor that supports ternary operation while being fast enough to be commercially competitive. From the first vacuum tube computers of the 1940s to the latest generation of processors, binary computation has been completely dominant. Silicon transistors replaced vacuum tubes, and until now, computational improvements have come almost entirely from making transistors smaller. That approach has worked well, but it is becoming a problem as we approach the physical limit of how small a transistor can be made. To keep improving computing technology, I designed a new type of transistor. Quantum Dot Field Effect Transistors (QDFETs) already exist and allow one or more intermediate states between the 1s and 0s of traditional transistors, but they have been far too slow for practical use. The performance gain of a ternary system (around ten times) is completely outweighed by devices through which signals can take up to 1,000 times longer to pass. In my project I turned to computational algorithms: a Monte Carlo algorithm randomly generated transistor designs, and a machine learning model filtered out the poorly performing ones. Because I did not have the resources to build every candidate, I used density functional theory (DFT) to simulate the quantum mechanical properties of each transistor accurately and determine which designs would perform better. After hundreds of thousands of iterations and many hours on Google’s servers, I arrived at a new prototype that is fast enough to match modern transistors while still supporting 3-valued logic instead of binary. This is significant because it is one of the first viable ways to move beyond the silicon transistor designs we have been using for half a century.
Many previously proposed approaches are nearly impossible to fabricate, but I was able to build micrometer-scale prototypes and obtain positive results without expensive laboratories or patented techniques. It is one of the most promising and least expensive approaches currently available, and it could herald a computing revolution.
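The general idea of combining Monte Carlo sampling with a cheap learned filter can be illustrated with a minimal sketch. Everything in it is an assumption for illustration only: the design parameters (`dot_radius_nm`, `gate_length_nm`, `barrier_height_ev`), their ranges, and the scoring functions are hypothetical and are not taken from the project. `surrogate_score` stands in for a trained machine learning model, and `expensive_simulation` stands in for a costly DFT run; both are made-up formulas, not physical models.

```python
import random
from dataclasses import dataclass


@dataclass
class Design:
    # Hypothetical design parameters; the project's real parameters are not given.
    dot_radius_nm: float
    gate_length_nm: float
    barrier_height_ev: float


def sample_design(rng: random.Random) -> Design:
    """Monte Carlo step: draw one candidate uniformly from assumed ranges."""
    return Design(
        dot_radius_nm=rng.uniform(1.0, 5.0),
        gate_length_nm=rng.uniform(5.0, 30.0),
        barrier_height_ev=rng.uniform(0.1, 1.0),
    )


def surrogate_score(d: Design) -> float:
    """Cheap stand-in for a trained ML model (higher = more promising).
    This is an arbitrary illustrative formula, NOT a physical model."""
    return (-0.05 * d.gate_length_nm
            + 0.5 * d.barrier_height_ev
            - 0.1 * abs(d.dot_radius_nm - 2.5))


def expensive_simulation(d: Design) -> float:
    """Placeholder for a slow, accurate simulation such as a DFT run.
    Here it is just another made-up score."""
    return surrogate_score(d) + 0.01 * d.dot_radius_nm


def monte_carlo_search(n_samples: int, n_keep: int, seed: int = 0):
    """Sample many random designs, keep the n_keep best by the cheap
    surrogate, and run the expensive evaluation only on that shortlist."""
    rng = random.Random(seed)
    candidates = [sample_design(rng) for _ in range(n_samples)]
    # Filter: rank by the cheap surrogate and discard the rest.
    candidates.sort(key=surrogate_score, reverse=True)
    shortlist = candidates[:n_keep]
    # Only the shortlist pays the cost of the expensive simulation.
    results = [(expensive_simulation(d), d) for d in shortlist]
    best_score, best_design = max(results, key=lambda r: r[0])
    return best_design, best_score, len(shortlist)


if __name__ == "__main__":
    best, score, n_evals = monte_carlo_search(n_samples=1000, n_keep=20, seed=1)
    print(f"expensive evaluations: {n_evals}")
    print(f"best design: {best} (score {score:.3f})")
```

The design choice being illustrated is cost: out of 1,000 random candidates, only 20 reach the expensive evaluation, which is why a filtering model makes large random searches affordable.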

The biggest roadblock in my project was the computational intensity required. Between thousands of quantum mechanical simulations, each requiring many core-hours, and training the machine learning model on huge amounts of data, there was no way I could complete the project on a single computer. To solve this, I used cloud computing services such as Google Colaboratory and other distributed systems, and I aggressively optimized my quantum mechanical simulations. Learning to run these simulations was another major hurdle, because the documentation is extremely fragmented. To work around this, I made extensive use of the developers’ forums and discussion pages and learned by running practice simulations. Finally, while my project relies on quantum dots, which are not too difficult to fabricate, building transistors at the nanoscale is impossible without seven-figure funding, so I had to make do with micrometer-scale and sometimes millimeter-scale models to test certain properties.

Nearly tripling computer performance, as my design could, would bring major improvements to almost every aspect of our lives, from solving longstanding problems to enabling brand new inventions. Faster computers would mean better scientific simulations and models for everything from quantum physics to climate change to organic molecules, allowing us to solve more problems more quickly. For everyday people, faster computing would mean more widespread and more powerful artificial intelligence and machine learning models, and wider adoption of autonomous robots, from drones to cars to robotic assistants, among many other innovations around the world.