Deeper Dive

I worked with my mentor, Dr. Jesse Geneson, researching mistake-bounded online learning. This is a form of machine learning in which the learner receives data points one at a time and updates its model after each observation, rather than training on the entire data set at once. Online learning is especially useful for predictions affected by trends that change over time, such as weather forecasts and stock prices. Dr. Geneson and I studied, and solved, several problems about the effects of weakened feedback in online learning. I was immediately captivated by the first problem Dr. Geneson showed me, an open problem studied and partially solved by a researcher named Philip Long, and was excited to begin researching.
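To make the mistake-bounded setting a little more concrete, here is a minimal Python sketch of the classic halving algorithm. It is a standard textbook example of mistake-bounded learning, not the method from this project. The sketch assumes full feedback (the true label is revealed after every prediction) and a finite hypothesis class that contains the target. The threshold functions and the data stream are hypothetical choices for illustration. The learner predicts by majority vote of the hypotheses still consistent with everything seen so far, so each mistake eliminates at least half of them. That caps the total number of mistakes at log2 of the class size.

```python
import math


def halving_learner(hypotheses, stream):
    """Classic halving algorithm over a finite hypothesis class.

    hypotheses: list of functions x -> 0/1, assumed to contain the true target.
    stream: iterable of (x, true_label) pairs, revealed one round at a time.
    Returns the number of mistakes made.
    """
    version_space = list(hypotheses)
    mistakes = 0
    for x, y in stream:
        # Predict with the majority vote of the hypotheses still consistent so far.
        votes = sum(h(x) for h in version_space)
        prediction = 1 if 2 * votes >= len(version_space) else 0
        if prediction != y:
            # A wrong majority vote means at least half the version space was wrong.
            mistakes += 1
        # Full feedback (an assumption here): the true label y is revealed every
        # round, so every hypothesis that disagrees with it can be discarded.
        version_space = [h for h in version_space if h(x) == y]
    return mistakes


# Hypothetical example: threshold functions h_t(x) = 1 if x >= t, for t = 0..16.
N = 16
thresholds = [(lambda x, t=t: int(x >= t)) for t in range(N + 1)]
target = thresholds[11]  # hypothetical hidden target
stream = [(x, target(x)) for x in [8, 12, 10, 11, 3, 15, 9]]

mistakes = halving_learner(thresholds, stream)
bound = math.floor(math.log2(len(thresholds)))
print(f"{mistakes} mistakes; worst-case bound is {bound}")
```

With weakened feedback, the learner might not see the true label `y` on every round, so the filtering step could not run as written. That is one way to picture why weaker feedback can force extra mistakes.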

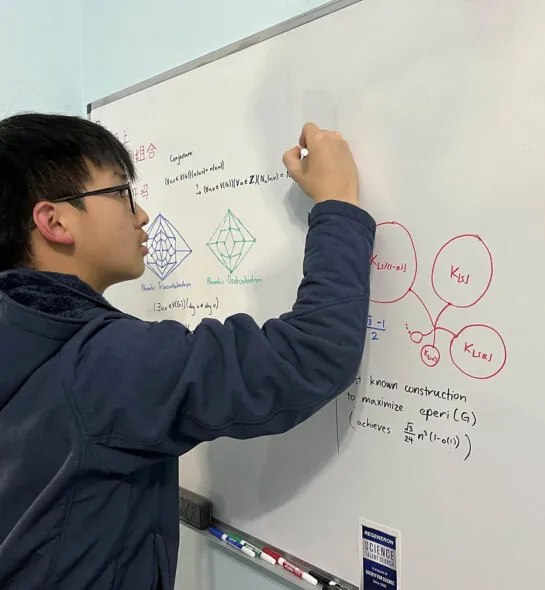

Often, I worked on a problem for hours without making tangible progress. At times the problems felt daunting because the space of possible strategies was so vast and elusive. Whenever an approach left me stuck, I stepped back and searched for new approaches and ideas. This experience taught me that in mathematical research, it pays to understand the surrounding literature very deeply. For instance, when I first read Long's partial solution to the open problem just carefully enough to follow its steps, my first few attempts to solve the remaining case were clumsy and fruitless. Only after reading more thoroughly, working through the computations myself, and getting an intuitive grasp of the proof did I figure out how to modify Long's strategy to tackle the final case.

I owe huge thanks to my mentor, Dr. Geneson, who met with me weekly to suggest possible directions for our research, gave me feedback on my mathematical writing and advice about the research process, and supported me in many other ways throughout the project.

Since my work studies an idealized model of online learning, it's difficult to apply the results directly to real-world machine learning. Still, the results identify worst-case scenarios for learning under weakened feedback and give strategies that learn effectively even in those scenarios. Such strategies could help adapt online learning models to learn under more adverse conditions.